IDE Imports Part 5 - Gradle GDK with Android Studio

This is the fifth of a series of posts discussing how to get Google Glass Mirror (Java) and GDK projects set up in various IDEs.

One of the most common questions I get from people during workshops is how to set up either the Mirror Quick Start or a GDK project in Eclipse, Android Studio, or IntelliJ.

This post will cover importing a Gradle GDK project into Android Studio.

0. Clone from GitHub

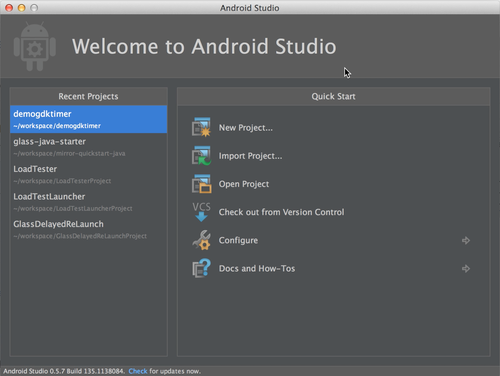

1. Create New Project

2. Set Android SDK to use GDK 19

3. Hit ‘Next’ a few times

Until the project is imported.

4. Import Module

Import the GDK project as a module. It will automatically be placed in the correct spot, and the Gradle configuration will be handled correctly.

5. Delete the dummy project

No reason to keep it around now.

6. Add compileSdkVersion to build.gradle

The correct value is:

compileSdkVersion "Google Inc.:Glass Development Kit Preview:19"
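For context, here is a minimal sketch of where that line might sit in the module's build.gradle. Only the compileSdkVersion string comes from this post. The plugin name, the buildToolsVersion value, and the defaultConfig block are assumptions based on a typical Android Gradle setup, so adjust them to match your own project.

```groovy
// Assumption: the module applies the Android Gradle plugin under this name.
apply plugin: 'android'

android {
    // From the post: compile against the GDK add-on rather than plain API 19.
    compileSdkVersion "Google Inc.:Glass Development Kit Preview:19"

    // Assumption: use whichever build tools version you have installed.
    buildToolsVersion "19.0.3"

    defaultConfig {
        // Assumption: SDK targets set to 19, matching the GDK level.
        minSdkVersion 19
        targetSdkVersion 19
    }
}
```

The value is a string rather than a number because it names an SDK add-on. A plain 19 would compile against the stock Android API, and the Glass classes would not resolve.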

7. Fix the AndroidManifest.xml

You need to have one Activity that is considered the launch Activity; otherwise IntelliJ won’t let you run.

8. Finished!

You’re all done!

IDE Imports Part 4 - Java Mirror with IntelliJ

This is the fourth of a series of posts discussing how to get Google Glass Mirror (Java) and GDK projects set up in various IDEs.

One of the most common questions I get from people during workshops is how to set up either the Mirror Quick Start or a GDK project in Eclipse, Android Studio, or IntelliJ.

This post will cover importing the Java Mirror Quick Start project into IntelliJ.

0. Clone from GitHub

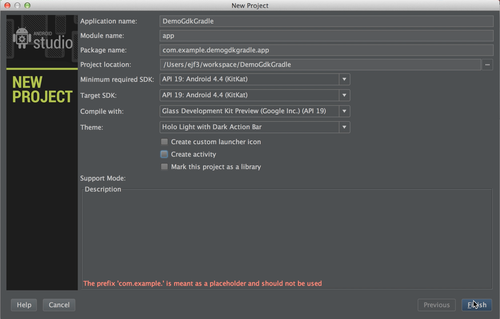

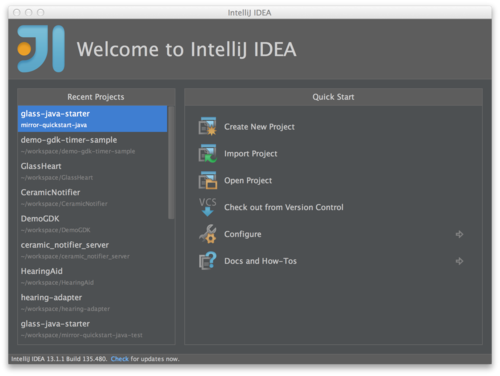

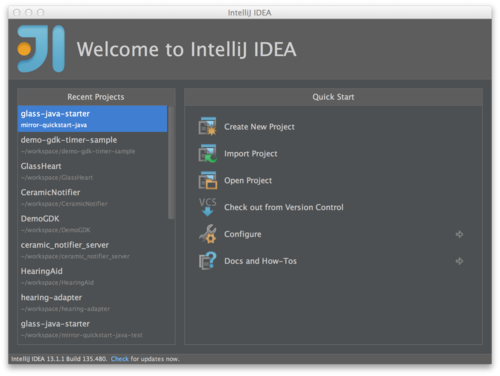

1. Import Project

In IntelliJ, select Import Project.

2. Create project from existing sources

Or you can import it as a Maven project. Either way works.

3. Hit ‘Next’ a few times

Then ‘Finish’ and you’ll be done with that.

4. Update the oauth.properties file

I previously made a video with instructions on this. Google has also posted instructions for getting OAuth set up.

5. Fire up a terminal

Run the following from the root of the project:

$ mvn install

$ mvn jetty:run

6. Finished!

You’re all done!

IDE Imports Part 3 - Gradle GDK with IntelliJ

This is the third of a series of posts discussing how to get Google Glass Mirror (Java) and GDK projects set up in various IDEs.

One of the most common questions I get from people during workshops is how to set up either the Mirror Quick Start or a GDK project in Eclipse, Android Studio, or IntelliJ.

This post will cover importing a Gradle GDK project into IntelliJ.

0. Clone from GitHub

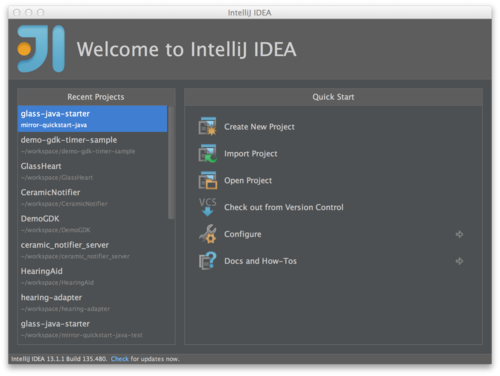

1. Create New Project

In IntelliJ, select Create New Project.

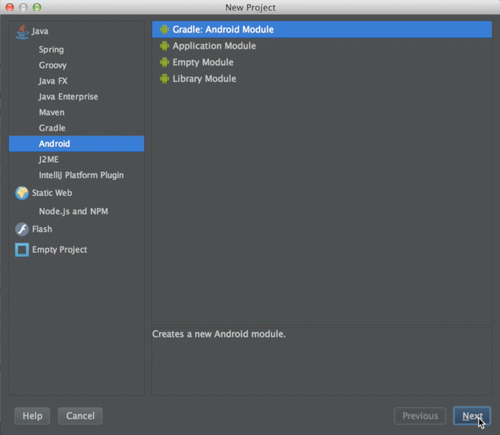

2. Gradle Android Module

3. Set SDK targets

Set all of them to 19, and set ‘Compile with’ to GDK Preview 19.

4. File -> Import Module

Now, we’re going to import the project that we want to work with. Make sure to select the project here.

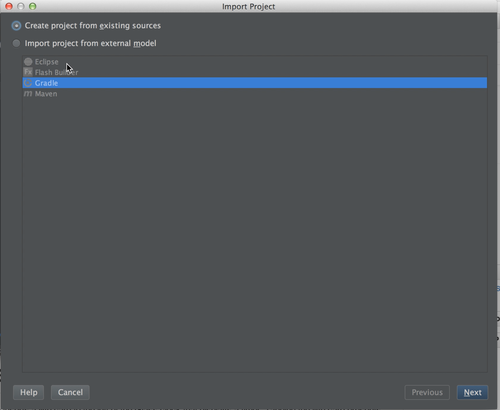

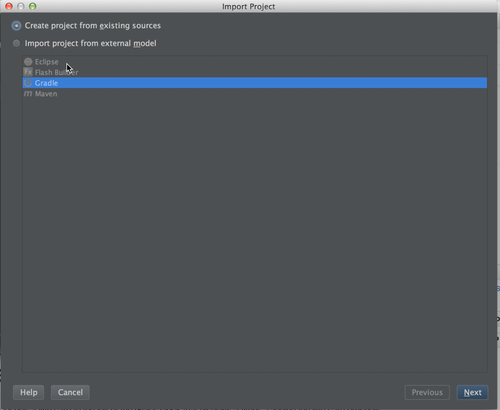

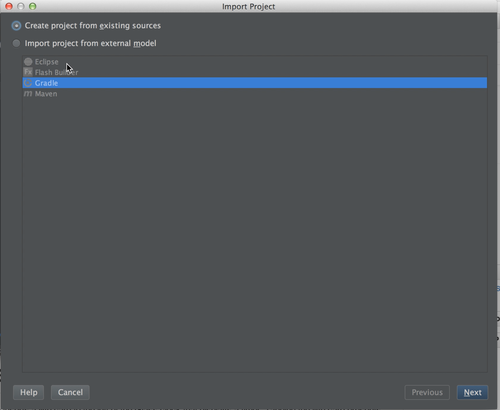

5. Create project from existing sources

Do not import it as an Eclipse, Gradle or Maven project.

6. Hit ‘Next’ a few times

Until it imports.

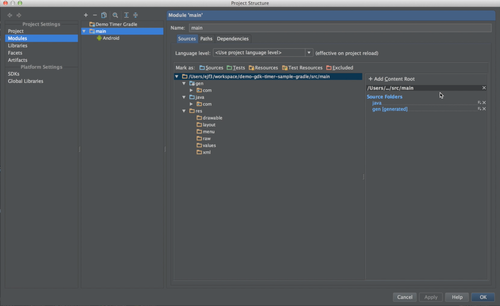

7. Fix project root

- Open the ‘Module Settings’ for the imported module

- Go to the ‘Modules’ section

- Remove the current ‘Content Root’

- Add the correct ‘Content Root’, which is the base directory of the module

- Make sure sources and resources are annotated correctly

- Hit ‘OK’

8. Move imported module into root of the previously created project

This should fix the build.gradle file; you should see it go from red to white (or black). Now you can also delete the dummy module that’s in there already.

9. Add compileSdkVersion to build.gradle

The correct value is:

compileSdkVersion "Google Inc.:Glass Development Kit Preview:19"

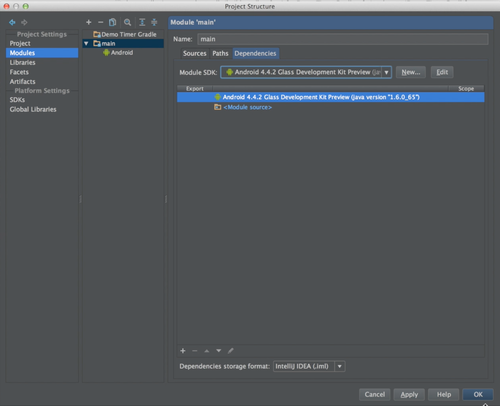

10. Select GDK 19

Go back into the Module Settings, then select our imported module, and the ‘Dependencies’ tab. From the drop-down, pick GDK 19.

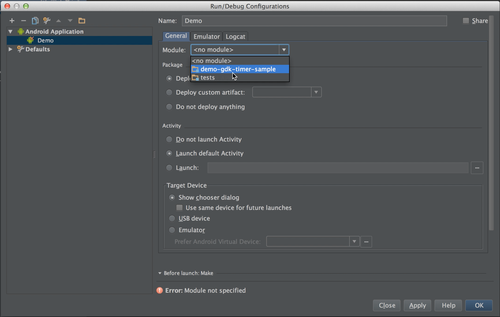

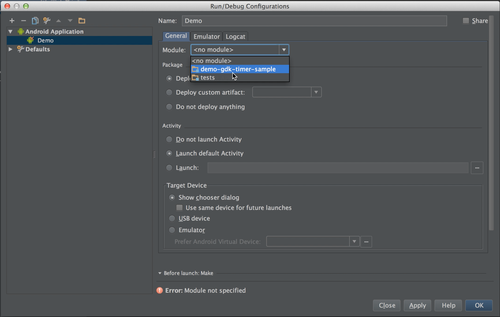

11. Add a Run Configuration

12. Fix the AndroidManifest.xml

You need to have one Activity that is considered the launch Activity; otherwise IntelliJ won’t let you run.

13. Finished!

You’re all done!

IDE Imports Part 2 - legacy GDK with IntelliJ

This is the second of a series of posts discussing how to get Google Glass Mirror (Java) and GDK projects set up in various IDEs.

One of the most common questions I get from people during workshops is how to set up either the Mirror Quick Start or a GDK project in Eclipse, Android Studio, or IntelliJ.

This post will cover importing a legacy style GDK project into IntelliJ.

0. Clone from GitHub

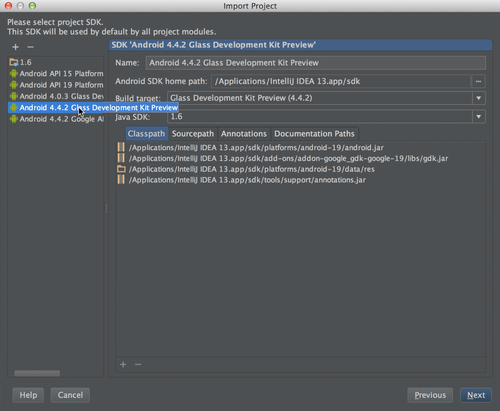

1. Import Project

In IntelliJ, select Import Project.

2. Create project from existing sources

Do not import it as an Eclipse, Gradle or Maven project.

3. Hit ‘Next’ a few times

Until you get to the Project SDK screen.

4. Select GDK 19

5. Hit ‘Next’ a few more times

Then ‘Finish’ to create the project.

6. Add a Run Configuration

7. Fix the AndroidManifest.xml

You need to have one Activity that is considered the launch Activity; otherwise IntelliJ won’t let you run.

8. Finished!

You’re all done!

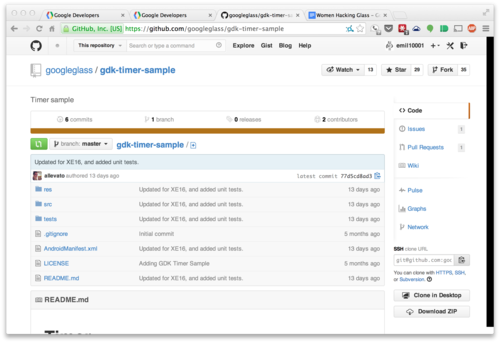

IDE Imports Part 1 - git clone

This is the first of a series of posts discussing how to get Google Glass Mirror (Java) and GDK projects set up in various IDEs.

One of the most common questions I get from people during workshops is how to set up either the Mirror Quick Start or a GDK project in Eclipse, Android Studio, or IntelliJ.

This first post will simply cover pulling down a project from GitHub.

1. Find the GitHub page of the project that you want to download

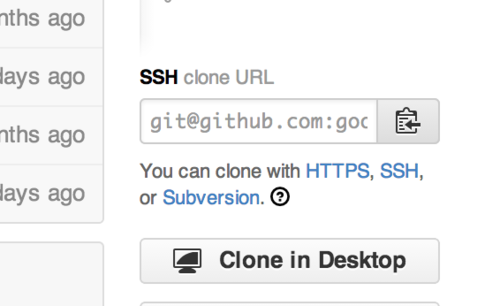

2. Copy the Clone URL

3. Go to a terminal

git clone <clone url>

4. Finished!

That’s it! You’re all set!

Hearing Aid Glassware

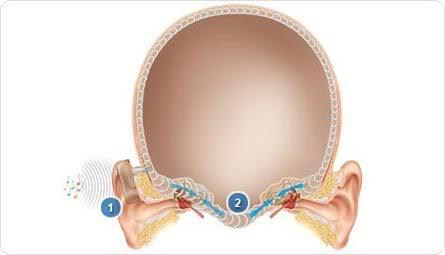

Last night, I received an interesting email from Ajay Kohli. I met Ajay at the CodeChix Glass workshop back in February. He’s a med student working at Kaiser, as well as a Glass Explorer. When I met him, he was very excited about the possibilities for using Glass in hospitals to assist physicians with all sorts of things, in particular during surgery. His email mentioned that he was working with a cochlear surgeon, and that they were discussing a rather interesting application for Google Glass. Apparently, bone conduction is a viable method to enable some people with hearing loss to hear, and there is currently a class of hearing aids that uses bone conduction. What’s interesting is that Google Glass uses bone conduction with its built-in speaker. Ajay was wondering if I knew of any Glassware or source code that could enable Glass to be used as a hearing aid.

I looked into it a bit, and initially found an old project that didn’t work. I figured that it probably wouldn’t be much effort to write something to do this, and I was not wrong. Here’s the entire code (sans resources) for my hearing aid Glassware:

You can check out the full project, and download the apk to try it out for yourself on GitHub.

Update

David Callaway had his father, who is hard of hearing and uses hearing aids, test this out. According to him, it actually works!

Update 2

Ajay Kohli used this for actual clinical trials! (I’m pretty thrilled that something that I did was studied scientifically.) Looks like Glass won’t be replacing hearing aids any time soon:

We did a functional gain test (hearing test with and without the glass on) yesterday on a bilateral mild conductive hearing loss. No significant improvement in hearing with the glass. We did a unilateral conductive hearing loss speech in noise test. No major improvement with glass as well.

At this point the limitations for glass as a hearing assistance device seem to be 1) available power (gain of making the bone conductor vibrate as fast as possible) and 2) possible processing power, if that processed speech cannot catch up to the live stimulus. So in a controlled audiology setting, these variables would have to be addressed to achieve hearing aid potential for glass.

Google Glass Mirror API Java Quick Start

I recently ran a workshop at Andreessen Horowitz, which was an introduction to Google Glass. It was a lot of fun, so I figured that I would create a screencast out of the content. This is the second of two posts on the subject. This one walks through the Mirror API Java Quick Start. Part 1 may be found here.

Related Material:

- All slides: http://goo.gl/IvzY0P

- Code: http://goo.gl/1w5XpR

- MenuBuilder: http://goo.gl/lJe2cF

- Blog: http://goo.gl/AOGVc8

A high-level look at the Mirror API

I recently ran a workshop at Andreessen Horowitz, which was an introduction to Google Glass. It was a lot of fun, so I figured that I would create a screencast out of the content. This is the first of two posts on the subject. This one discusses the Mirror API at a high level. Part 2 may be found here.

Related Material:

- All slides: http://goo.gl/IvzY0P

- Code: http://goo.gl/1w5XpR

- MenuBuilder: http://goo.gl/lJe2cF

- Blog: http://goo.gl/AOGVc8

Next, take a look at part 2, which walks through the Java Quick Start for Mirror.

Why buy Google Glass?

… or any other smart wearable?

There have been several recent posts on how Google Glass is a failure, written by so-called tech experts. Here’s a quick list; I’m sure you can find more:

- How Google Fumbled Glass — and How to Save It

- +Robert Scoble - Context.

- One Of Google’s Biggest Fans Calls Glass ‘An Expensive Nightmare’

On top of that, one third of early wearable adopters have stopped wearing them already. What’s going on here? I thought that wearables were going to be the next big thing.

They are, but we’re not there yet.

Right now, we are at the beginning. It doesn’t surprise me at all that wearables are not proving useful enough to use on a daily basis. The truth is they’re not useful enough yet.

What’s more, the article discussing the decline in use was looking at activity tracking devices. It doesn’t surprise me that people might lose interest in activity tracking after doing it for a few months and not seeing much improvement. I don’t log into my Basis dashboard very often these days, though I do wear the device daily. Regardless, I think that it is more useful if we focus on smart wearables, and specifically Glass here.

Let’s have a brief history lesson.

Consider the case of smartphones, when they were popularized several years ago. When Android launched, standalone GPS units were relatively expensive, and the iPhone didn’t have a decent navigation solution. Android came onto the scene and provided a really, incredibly useful app, Maps, which included real-time navigation. (Actually, I don’t remember exactly if Maps provided this out of the gate, but I do remember paying $30 for a standalone GPS app on my G1 that let you download cached maps.) Both products also opened the door to over-the-top messaging and calling products that have driven prices for those services down across the board. Both platforms also opened up new markets for casual gaming, which barely existed before. They allowed you to read the news on the train without needing to buy a newspaper. There are countless other things that smartphones are doing these days that people really wouldn’t want to live without.

That said, it took time for people to realize these use cases, and to begin to rely on their phones more and more. The performance out of the gate with the G1, and I assume the original iPhone, left a lot to be desired. The hardware limitations made it difficult to imagine a world where we have more people coming online for the first time with mobile phones than computers. I had an idea that they could be that useful, but until recently, I hadn’t seen a compelling enough combination of hardware and software to get me to reach for my phone over my laptop (or even my tablet). Glass (and other smart wearable platforms) will have to get over the same hump.

Frustrations with Glass are not failures, they are opportunities.

It’s true that Glass doesn’t have any/enough killer apps yet. There are a handful of cool apps for Glass: LynxFit, WinkFeed, Shard, my app Ceramic Notifier, and of course the camera, Google+, Hangouts, Google Now, and Gmail. For Glass to be a good value proposition to consumers, there need to be at least a few things that you can do with Glass that you simply could not do without it.

It will probably be at least a couple of years before we’ve had enough time to build out the ecosystems for these platforms, and to even think about what these killer apps might be. The truth is that these new wearable platforms are very different from smartphones. They may run similar software stacks, but people interact with them completely differently. What I’m trying to get out of the Explorer program is to learn what these behaviors are going to look like. I want to understand this new paradigm from the perspective of the user, along with all the frustrations.

Frustrations with Glass are not failures, they are opportunities.

Why buy Google Glass, or other smart wearables right now?

My answer: if you’re a developer looking for a billion-dollar opportunity, this is the space to be in. Otherwise, wait a little bit. Smart wearables will still be there in six months or a year, and they’ll be better.

My take on NPR's Glass story

NPR ran a story on distracted driving and legal challenges with Google Glass on All Things Considered.

The piece highlights some of the legal issues, such as states proposing legislation that would mark wearing Glass on the road as distracted driving. Unfortunately, the politicians do not seem to understand Glass, and the Explorer that they interviewed was allowing himself to be distracted by Glass while driving, reading off Field Trips information and claiming that it was OK because the screen was transparent.

My personal view is that it is a lot easier to avoid being distracted by Glass than by your phone while driving. Glass allows you to quickly dismiss unnecessary information, whereas the phone requires more interaction to get and dismiss the same information. From there, it depends on what sort of information you’re getting. A lot of times, with an email or text message, Glass can read the content aloud to you, so that you don’t need to look at the screen. Responding also does not require you to look at the screen.

The device can be either much less distracting than a phone or just as distracting, depending on how you use it. I think that the Glass team needs to tackle this problem head on, and make sure that Explorers have no illusions about what constitutes a distraction. They also need to do a better job of communicating all of these things to the public. Finally, they should add some APIs that let developers determine whether navigation mode is enabled. From there, developers might choose not to surface information, or to delay it based on ETA. E.g., never show me Field Trips or news while driving.